Games in Artificial Intelligence Development

Introduction

We’ve come a long way from the Stone Age. Ten thousand years ago our ancestors were throwing rocks at each other; today we throw our presuppositions at one another across social media platforms (which can be especially rough during an election year such as 2020). Despite all the social media pandering, this new digital age has also opened up many avenues for civility. Merging an ancient game of stones with futuristic cyber-electronics, we have the currently running 2020 World Artificial Intelligence GO Competition. This competition is the preeminent toe-to-toe test of machine learning GO programs!

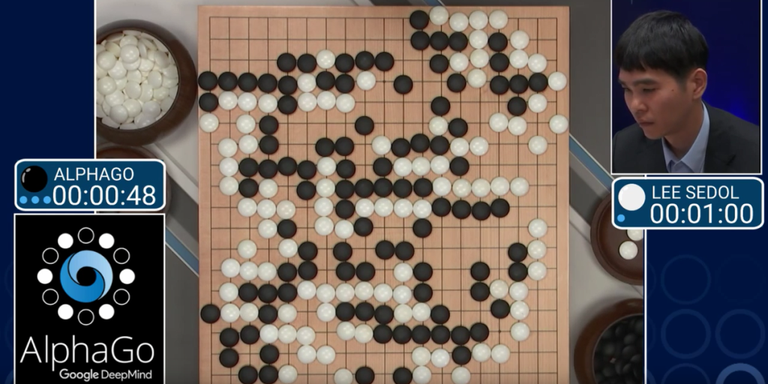

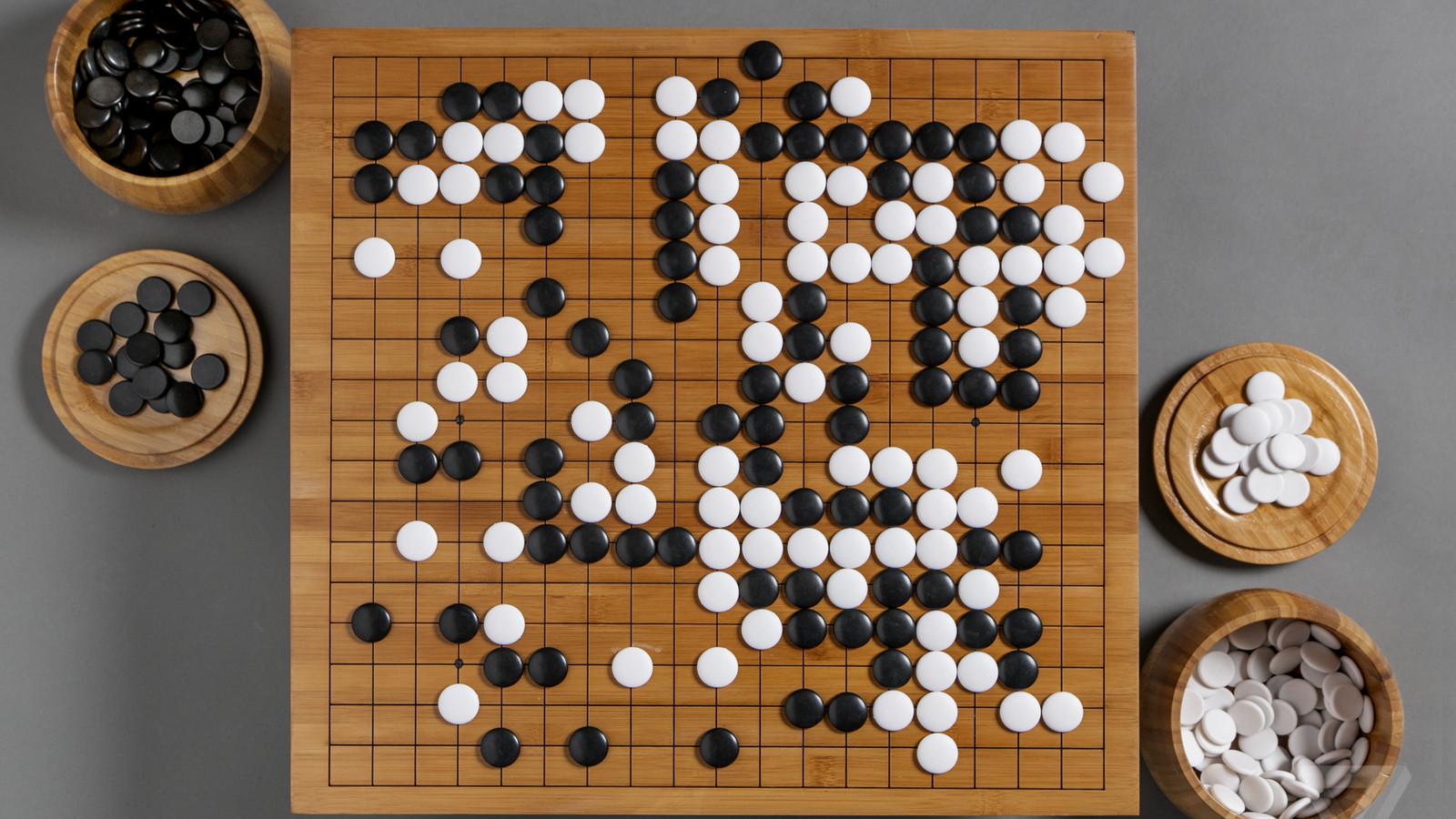

Only four years ago did computer AIs become sophisticated enough to beat top humans at GO. In March 2016, when DeepMind’s AlphaGO beat master GO player Lee Sedol 4-1, the worlds of GO and computer science were altered forever. The ancient Chinese board game of GO had eluded computer scientists for decades. But in the four years since, with GO and other games as testing grounds, Artificial Intelligence (AI) development has grown at a remarkable pace.

AlphaGO vs. Lee Sedol (source)

Arguably, we are living through the dawn of a higher intelligence, and it is not human. In this article I would like to briefly examine how we got to this point, what it took to get here, how playing games accelerated this path, and where it may be taking us. AI is going to change our lives whether we want it to or not. Keep reading to find out whether you are ready for this change.

How We Got to AI in Gaming

The first notions of AI date back to antiquity, in varying myths of automatons. The Greek story of Talos, the bronze giant built to protect the island of Crete from pirates and invaders, is one such myth. Another progenitor of AI myths is the Jewish folktale of the Golem, a clay figure conjured when needed to protect villages, or simply to do a few chores around the hovel. Many other concepts of animated automatons appeared over the centuries, including the famous mechanical knight designed by Leonardo da Vinci, which he reportedly paraded around during a celebration hosted by Ludovico Sforza at the court of Milan in 1495.

A functioning, life-size recreation of da Vinci's automaton. (source)

The idea of AI had been incubating in the human imagination for centuries, so it didn’t take much to inspire the pioneers of computer science to begin formulating ways to make it a reality. In the 1930s two famous mathematicians, Alan Turing and Alonzo Church, developed a theory of computation suggesting that a machine manipulating simple symbols, such as 0 and 1, could simulate any process of formal reasoning. Thus, mathematical theory implemented in a digital apparatus could, in principle, create artificial intelligence.
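To make Turing's idea concrete, here is a minimal Python sketch of a Turing machine: a table of rules that reads one symbol at a time, writes a symbol, and moves left or right. The rule table, which adds 1 to a binary number, and the names `run_turing_machine` and `INCREMENT_RULES` are our own illustrative choices, not anything Turing or Church wrote down.

```python
# A minimal Turing machine simulator (illustrative sketch, not a historical program).
# Each rule maps (state, symbol read) -> (symbol to write, head move, next state).
Rule = tuple[str, int, str]

BLANK = "_"

# Hypothetical example rule table: increment a binary number by 1.
INCREMENT_RULES: dict[tuple[str, str], Rule] = {
    ("right", "0"): ("0", +1, "right"),      # scan right over the digits
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),  # past the last digit: start adding
    ("carry", "1"): ("0", -1, "carry"),      # 1 + 1 = 0, carry the 1 leftward
    ("carry", "0"): ("1", 0, "halt"),        # 0 + 1 = 1, done
    ("carry", BLANK): ("1", 0, "halt"),      # carried past the leftmost digit
}


def run_turing_machine(tape_input: str, rules: dict[tuple[str, str], Rule],
                       state: str = "right", max_steps: int = 10_000) -> str:
    """Run the machine from the leftmost symbol until it reaches the 'halt' state."""
    tape = dict(enumerate(tape_input))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            cells = [tape.get(i, BLANK) for i in range(min(tape), max(tape) + 1)]
            return "".join(cells).strip(BLANK)
        write, move, state = rules[(state, tape.get(head, BLANK))]
        tape[head] = write
        head += move
    raise RuntimeError("machine did not halt within max_steps")


print(run_turing_machine("1011", INCREMENT_RULES))  # 1100 (11 + 1 = 12)
```

Everything this machine "knows" lives in six rules. The theory's striking claim is that richer rule tables of exactly this kind are, in principle, enough to express any computation a modern computer performs.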

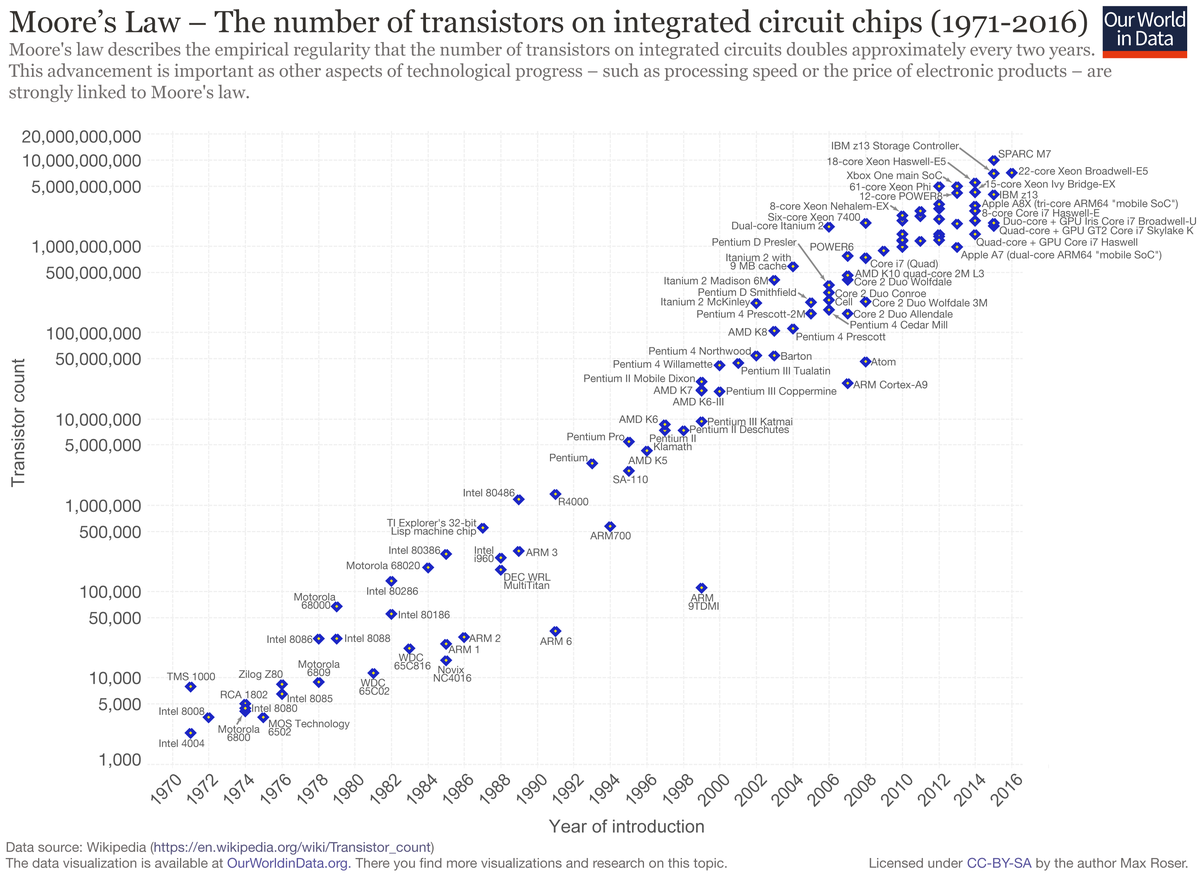

After WW2 it wasn’t long before AI research emerged as a field of computer science. Progress over the following decades was slow and uneven, hampered by funding cuts and engineering hurdles. By the 1990s, computing power, growing as Moore's Law predicted (the number of transistors on a chip doubling roughly every two years), had finally caught up with many real-world demands. Entering the early 21st century, researchers began developing new approaches to AI.

Machine Learning in Gaming

Toward the end of the 20th century, a subfield of AI research called machine learning came into its own. Machine learning uses large sets of data to make predictions, improving their accuracy over time. This approach can use neural networks and competitive-learning techniques such as self-play to grow, which basically means that we make the computers teach themselves.

The fun and interesting thing about machine learning, and especially competitive networks, is that you can program an algorithm to compete against itself and sharpen its skills, ad infinitum, in any conceivable “game” that has logical rules to follow. The scary part is that it can far exceed human performance, sometimes practically overnight. Some research groups have even taught their machines to play the game of computer chip design. Because better chips can in turn train better AI, teaching machines to design chips adds another source of exponential growth to AI development. It may not be long before AI is the primary driver of its own development.

A literal machine learning. (source)
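The idea of an algorithm sharpening its skills by playing against itself can be shown on a game far smaller than GO. The sketch below is a hypothetical toy, not how AlphaGO or any other system mentioned here works: it uses a simple subtraction game (players alternately remove 1 to 3 stones, and whoever takes the last stone wins), one value table shared by both sides, and a plain negamax backup in place of a neural network. The names `train_by_self_play` and `best_move` are illustrative.

```python
import random

MOVES = (1, 2, 3)  # a player removes 1, 2, or 3 stones per turn


def legal_moves(pile: int) -> list[int]:
    """Moves available when `pile` stones remain."""
    return [m for m in MOVES if m <= pile]


def train_by_self_play(start_pile: int = 12, games: int = 5000,
                       epsilon: float = 0.3, seed: int = 0) -> dict[int, float]:
    """Learn value[s]: how good a pile of s stones is for the player to move.

    Taking the last stone wins, so facing an empty pile means you have
    already lost: value[0] = -1. Both sides read and write the same table,
    so every game is the agent playing against itself.
    """
    rng = random.Random(seed)
    value = {s: 0.0 for s in range(start_pile + 1)}
    value[0] = -1.0
    for _ in range(games):
        pile = start_pile
        while pile > 0:
            moves = legal_moves(pile)
            # Negamax backup: my position is worth the best of the (negated)
            # positions my opponent could be left facing.
            value[pile] = max(-value[pile - m] for m in moves)
            if rng.random() < epsilon:
                move = rng.choice(moves)       # explore: try a random move
            else:
                move = best_move(value, pile)  # exploit current knowledge
            pile -= move  # now the "opponent" (the same agent) moves
    return value


def best_move(value: dict[int, float], pile: int) -> int:
    """Greedy move: leave the opponent the position that is worst for them."""
    return min(legal_moves(pile), key=lambda m: value[pile - m])


values = train_by_self_play()
print([best_move(values, s) for s in (5, 6, 7)])  # [1, 2, 3]
```

Starting from a table of zeros, the agent rediscovers the known winning strategy on its own: always leave your opponent a multiple of four stones. The exploration rate `epsilon` makes it occasionally try moves it currently considers bad, which is how it reaches positions it would otherwise never visit.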

By applying these machine learning techniques, we have been able to overcome previously impassable obstacles, such as mastering the game of GO. GO has an astronomically large number of legal positions, far more than the number of atoms in the observable universe, so it was thought that computers could never play GO well by brute-force calculation. With machine learning, they didn’t have to. Instead, a novel approach, in which neural networks guide the search toward promising moves, was able to “intuit” beneficial GO moves more accurately than humans.
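The claim about GO's size is easy to check with a little arithmetic. Each of the 361 points on a 19×19 board can be empty, black, or white, so 3^361 is an upper bound on the number of board configurations (most of them are illegal; the exact count of legal positions, roughly 2 × 10^170, was only computed in 2016). The figure of 10^80 atoms in the observable universe is a commonly quoted rough estimate, used here as an assumption.

```python
BOARD_POINTS = 19 * 19           # intersections on a standard GO board
upper_bound = 3 ** BOARD_POINTS  # each point is empty, black, or white
ATOMS_ESTIMATE = 10 ** 80        # rough, commonly quoted estimate (assumption)

digits = len(str(upper_bound))
print(BOARD_POINTS, digits)               # 361 173
print(upper_bound > ATOMS_ESTIMATE ** 2)  # True: more than atoms squared
```

Even this crude bound shows why brute force was hopeless: the board has more configurations than there would be atoms if every atom in the universe contained a universe of its own.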

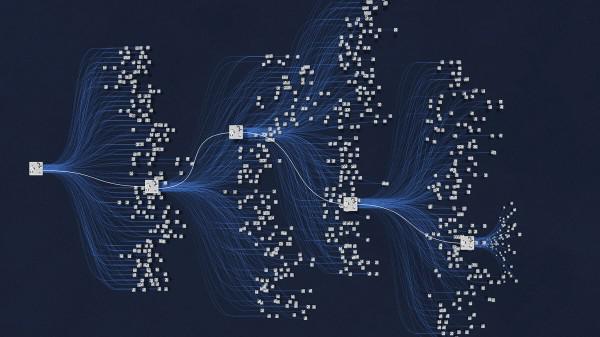

A still from the documentary AlphaGO, graphically depicting AlphaGO’s machine learning decision pathway.

DeepMind's AlphaGO and Lee Sedol

DeepMind, founded ten years ago in the UK, has been developing high-level AI with an emphasis on machine learning and neural networks. As a cutting-edge AI company, it was acquired by Google in 2014 and became a wholly owned subsidiary of Alphabet Inc. in 2015. DeepMind has created neural networks capable of learning to play games much as humans do, as well as the Neural Turing Machine, a neural network coupled to an external memory that loosely mimics the short-term memory of the human brain.

From March 9th to 15th, 2016, DeepMind became famous when its GO-playing AI, AlphaGO, challenged 9-dan professional Lee Sedol and won 4-1. At the time, many experts thought AI would not be able to defeat top humans at GO until roughly 2022, so DeepMind arrived years ahead of schedule. This abrupt shock to the world of AI triggered an awakening in the East, especially in China, which has since dedicated billions to AI research.

Lee Sedol contemplates his next move against AlphaGO. Image from the documentary AlphaGO.

Lee Sedol still considers his single victory against AlphaGO the crowning achievement of his GO career, calling it a “...victory for humanity.”

Use of Games in AI Development

Scene from the 1983 movie WarGames, in which a military AI at NORAD nearly starts a real war while playing a game.

Using machine learning to overcome the challenges posed by games has greatly accelerated AI research. What makes games valuable is that mastering them demands novel strategies. With machine learning techniques, a neural network can essentially teach itself to play a game and, in many cases, quickly surpass human ability. Often the AI develops strategies that humans had never conceived of before, and in many cases we struggle to comprehend how or why they work.

Since the “real world” is a difficult sandbox for AI to play in, games have been a relief for researchers. Games like chess, GO, DotA 2, StarCraft, Jeopardy, and even poker all challenge AI with massive amounts of data and unconventional reasoning obstacles. Some games, such as chess, demand analytical reasoning; others, such as Jeopardy, require language comprehension; and still others, like GO and poker, call on something like our very own human intuition.

Chess AI & Deep Blue, Kasparov in 1996

Before there was AlphaGO, there was Deep Blue. On February 10th, 1996, IBM’s Deep Blue became the first computer chess engine to win a game against a reigning world chess champion, Garry Kasparov, under standard tournament conditions. However, Kasparov came out on top of the six-game match, winning 4-2. By May 1997 Deep Blue had been upgraded, and it defeated Kasparov 3½-2½ in the pivotal rematch that forever changed how humans perceive computer intelligence.

An exasperated Garry Kasparov contemplates his next move against Deep Blue on May 4th, 1997, in New York. (source)

Kasparov described playing Deep Blue as facing an “alien opponent” but later said it was only about as “...intelligent as your alarm clock.” The IBM computer scientists believed that chess was a good metric for the progress of AI development. Some rumors suggested that IBM was simply orchestrating a media stunt to raise its stock price. The stock did go up, and kept climbing until the dot-com bubble burst.

We now take our computer chess programs for granted, but it is worth remembering, especially for younger generations, that before this defining moment most people believed computers would never be capable of defeating humans at our own games. IBM’s Deep Blue changed all of that.

IBM's Watson on Jeopardy in 2011

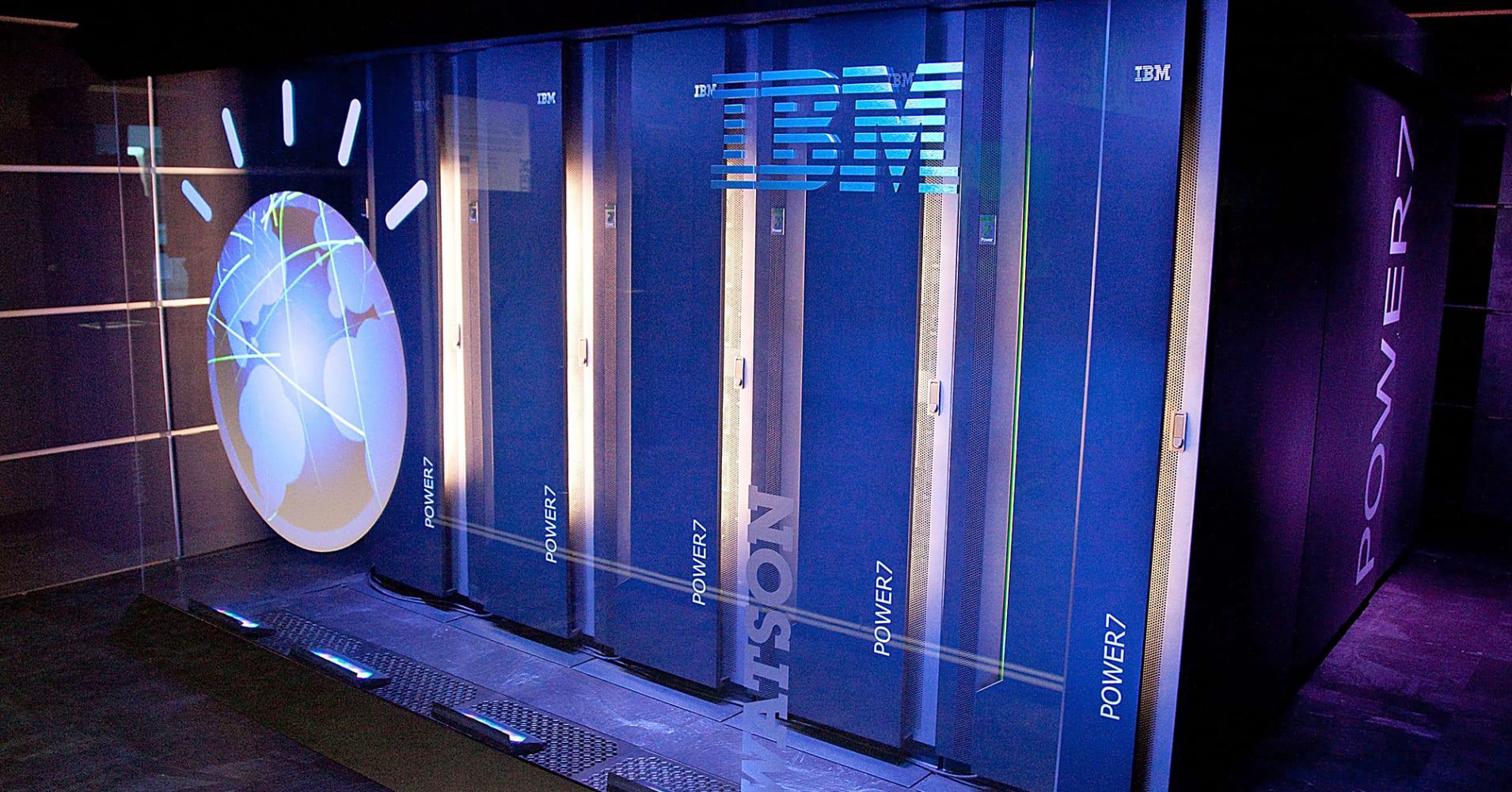

Some of IBM Watson’s computing systems. (source)

IBM struck again in 2011 with Watson, a computer system created to showcase advanced natural language processing by answering questions on the quiz show Jeopardy. Watson competed on Jeopardy and won against champions Ken Jennings and Brad Rutter. To accomplish this, the IBM team supplied Watson with millions of documents, including dictionaries, encyclopedias, literary works, databases, and other reference material from which it could construct its knowledge. Like Deep Blue, Watson represented another major milestone in AI development, and things were only just beginning.

DotA 2 Video Game & OpenAI with Elon Musk

Considered a competitor to DeepMind, OpenAI was founded in 2015 by Elon Musk and others to conduct AI research, with the stated goal of promoting friendly AI in a way that benefits humanity as a whole. It develops many forms of AI, including generative models, which may soon be writing online articles, movie scripts, novels, and more.

An esports arena in Seattle featuring DotA 2 in 2017. (source)

In April 2019, at a live exhibition match in San Francisco, OpenAI’s deep learning AI, OpenAI Five, defeated OG, the reigning human world champions of the video game DotA 2. DotA 2 pits two teams of five against each other in a real-time strategy setting. OpenAI Five consists of five bots specifically trained to play DotA 2, each controlling one hero, so the bots must coordinate their efforts to play as a team of five. Not only are these AI bots defeating humans at real-time strategy, but they are also constructively assisting one another, displaying teamwork in the process. In the bots’ final public appearance, an online competition held over a four-day period, they played 42,729 games and won 99.4% of them.

Elon Musk has been largely cautious about AI, describing it as “...summoning the demon,” a force we may not be able to contain. His concern over AI’s destructive potential is what largely inspired him to start OpenAI. Musk has reportedly recommended three books for developing a better general understanding of AI’s potential: Nick Bostrom’s Superintelligence, Max Tegmark’s Life 3.0, and James Barrat’s Our Final Invention.

Artificial Intelligence in Poker - DeepStack

A high-stakes game of poker with a pair of aces.

A team from the University of Alberta, Canada, combined deep learning algorithms to create an AI called DeepStack to play and win at heads-up no-limit Texas Hold ’em. Poker was considered a harder game for AI to master because of imperfect information: players must “guess” the cards their opponents hold. The team trained DeepStack’s neural networks on over ten million randomly generated poker situations. Relying on those networks, DeepStack then played 44,852 games against professional poker players recruited by the International Federation of Poker, and beat them by a margin more than ten times what professional players consider sizable.

AI for Playing StarCraft and AlphaStar

A look inside AlphaStar’s mind. Image credit Blizzard.

DeepMind’s second coming, AlphaStar, is designed to play StarCraft 2. Because opponents are hidden from view in the real-time strategy game StarCraft, it was thought to be more challenging for AI than traditional board games like chess and GO. Unlike AlphaZero (AlphaGO’s successor), which learned purely by playing against itself, AlphaStar first learned to play StarCraft 2 by imitating the strategies of top players in a database of human games.

In StarCraft 2, players start with little information about the game environment and must explore to discover the game world. By imitating human players’ strategies, the AI was able to sidestep the difficulty of figuring out how to explore by itself. Once AlphaStar had developed its exploration abilities, it played against itself using competitive-learning techniques. Additional adversarial bots were introduced during training to expose weak spots in its strategy. AlphaStar gained Grandmaster status playing StarCraft 2 in August 2019.

Game of GO and Artificial Intelligence

AI researchers are not done with GO yet! After AlphaGO, a tsunami of interest reinvigorated both the GO community and the field of AI. Many machine learning scientists jumped on the bandwagon to use GO as an experimental platform for AI research. Rightly so, because even though AI is now sophisticated enough to consistently defeat humans at GO, the game itself has not been solved: nobody, human or machine, knows the outcome of perfect play. GO derives great complexity from very simple rules. Children may learn to play GO at a very young age, yet spend a lifetime trying to master it. The sheer number of possible legal positions makes GO even harder than chess to calculate through to the end.

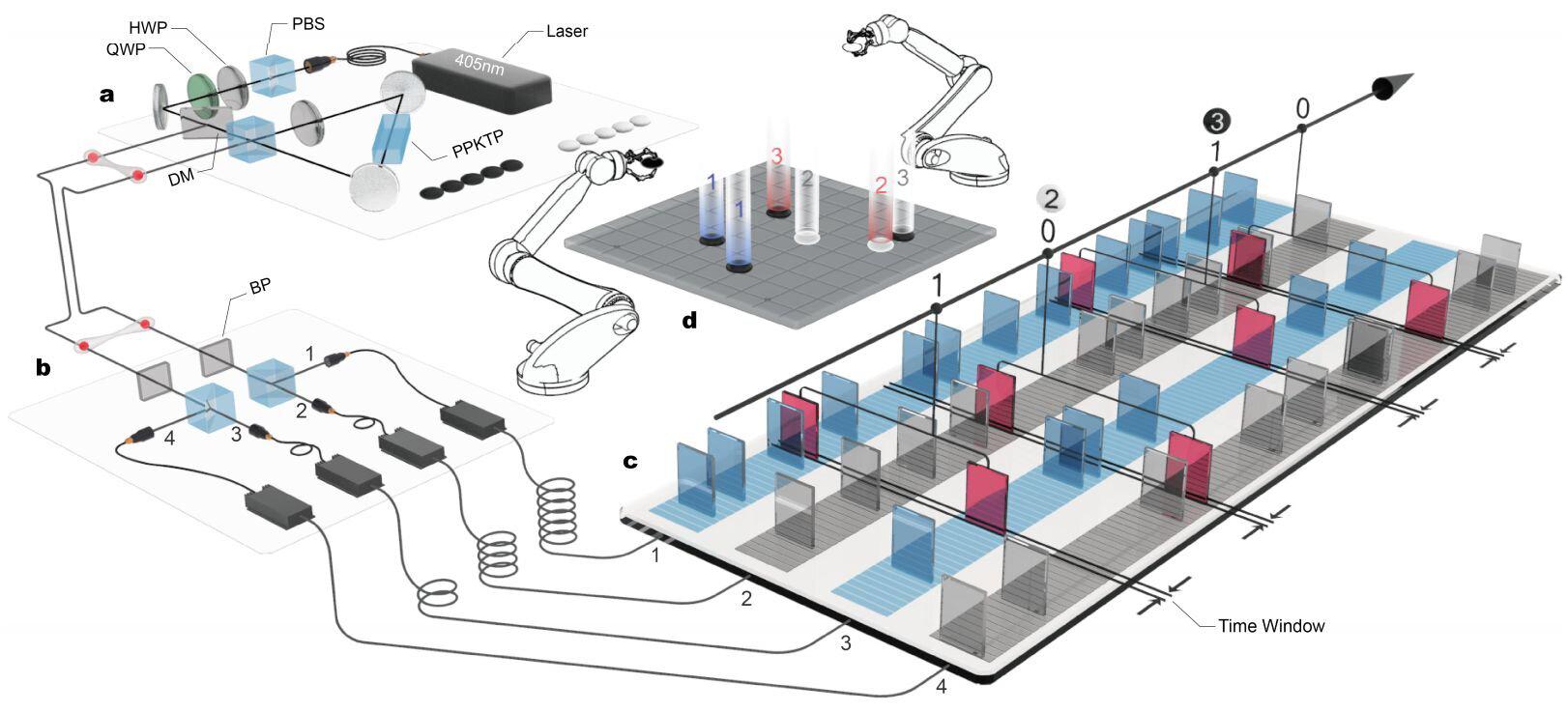

Artist’s depiction of the Quantum GO Machine. (source).

Despite the presently incalculable scale of GO, researchers are already devising new ways to use GO for future AI development. A research team in China has created a form of “Quantum-GO” that uses entangled photons, acting as GO stones, to add exponentially more possible positions and complicating variables. By entangling each move as the game progresses, they add an element of randomness and incomplete information. The researchers hope to use quantum-GO to train more advanced AI as the technology matures, a demand they expect the advent of quantum computing to create.

GO AI Tournaments Around the World

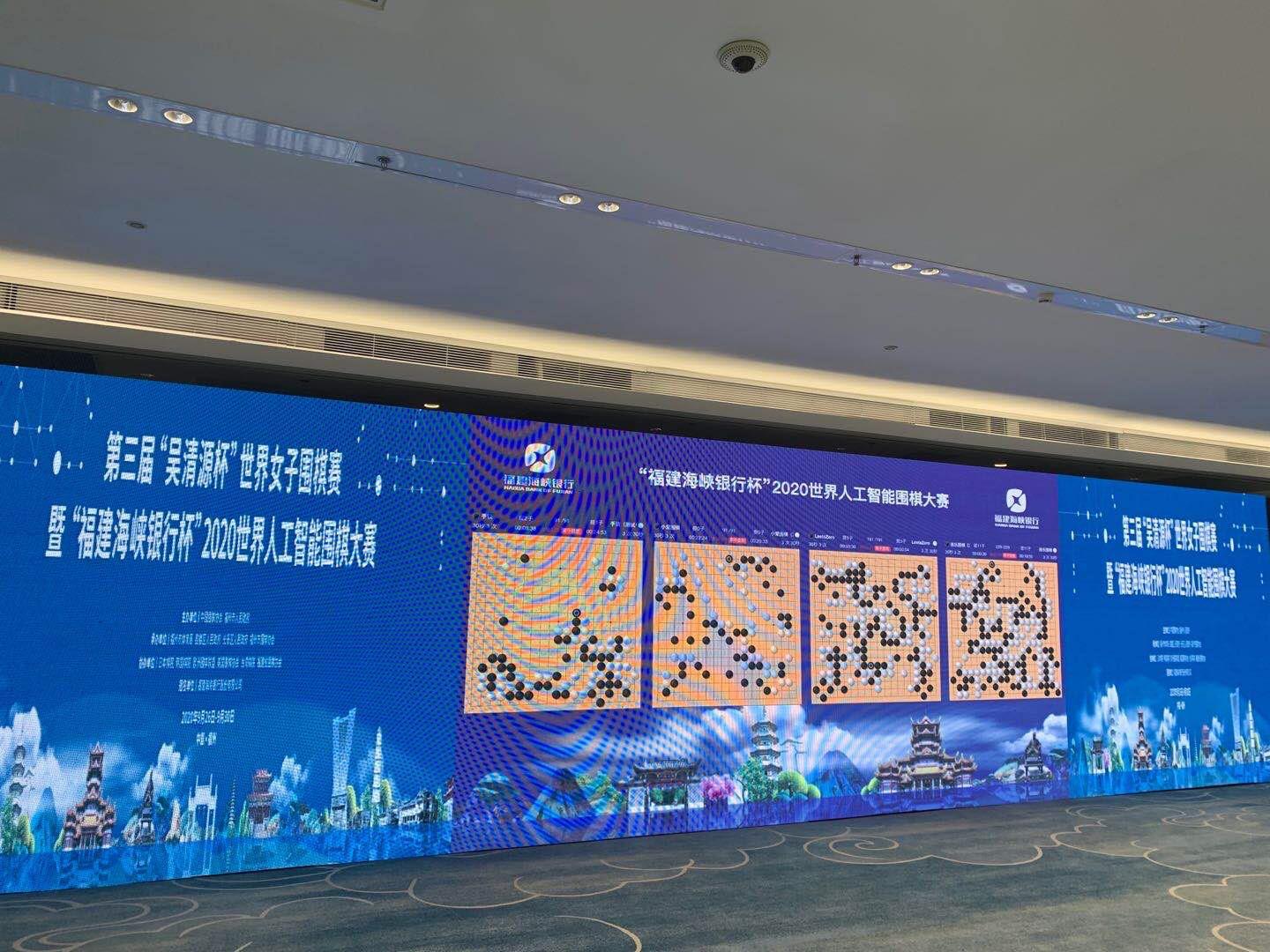

Scene from the “Haixia Bank of Fujian Cup” 2020 World GO AI Open Competition floor.

Before quantum computing takes over the world, the ancient game of GO is being reinvigorated for the 21st century. Teams around the world are building GO engines to compete against each other in the World GO AI Competition. As part of the greater Chinese initiative to increase interest in AI development, China is organizing these GO AI tournaments to test algorithms and encourage constructive competition. By playing GO engines against one another in this competition, developers are able to uniquely challenge their algorithms in ways they’re unable to in the lab. The 2020 World GO AI Competition is being held from September 27 to December 30.

Where Is AI Going?

The AI connectivity of the Internet of Things. (source)

We stand at the cusp between the world before AI and the world forever changed by AI. The 21st century has brought many radical shifts in our culture, and the ubiquity of AI will arguably become the most profound shift of all. With 5G just now coming online, we will begin to see the Internet of Things take over our lives. In a world of the Internet of Things, our refrigerators will know when we're out of milk and automatically order a new carton online, to be shipped to our door or even delivered straight into the refrigerator, all monitored by an AI system that manages our homes and daily lives. These AI systems may well be trained using games.

The Internet of Things and having AI in your pocket are the very present future. In the deeper future, we can only begin to imagine the full impact AI will have on the world. Over the next century, AI may well come to perform nearly any skill a human can at a superhuman level, and many of the jobs that exist for humans today could be replaced by higher-functioning AI automation. The trend in AI development suggests that obstacles of reasoning will, one by one, be overcome by AI. Even art, music, fiction, and other creative forms are beginning to be replicated by AI. Naturally, people will likely always retain a taste for good old-fashioned handcrafted human work. Nonetheless, the machines are coming.

I Think, Therefore I am AI?

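Scene from Stanley Kubrick's 2001: A Space Odyssey, in which the computer system HAL 9000 appears to become self-aware. (Fun fact: HAL is often read as a wink at IBM, since each letter in HAL comes one letter before the corresponding letter in IBM, though Arthur C. Clarke maintained the resemblance was a coincidence.)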
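The HAL/IBM resemblance is a one-letter alphabet shift (a Caesar shift), which a few lines of Python can confirm. The helper name `shift_letters` is our own, and it assumes uppercase A to Z input.

```python
def shift_letters(word: str, offset: int) -> str:
    """Shift each uppercase letter A-Z by `offset` places, wrapping Z around to A."""
    return "".join(
        chr((ord(ch) - ord("A") + offset) % 26 + ord("A")) for ch in word
    )


print(shift_letters("HAL", 1))   # IBM
print(shift_letters("IBM", -1))  # HAL
```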

When considering the implications of AI, one cannot pass up the opportunity to conjecture about the potential sentience of thinking machines. If Lieutenant Commander Data from Star Trek were to one day walk down our streets, would we begin to question whether androids deserve equal human rights? How do we compare the value of the life of thinking electronics to the value of thinking biologics? If human consciousness is an emergent property of our brains (still up for debate), could consciousness emerge from similarly complex computational minds in machines? Fortunately, it is more fun to ask these questions than to answer them, so I will leave you guessing whether one day your GO program will develop self-awareness.

An Ethical Consideration of AI

During the Manhattan Project, scientists convened to discuss whether or not they should build the atomic bomb. As we know from history, they ultimately decided that building it was the most ethical option they had at the time. Some may look down on them for this decision, but they did not make it lightly or without deep introspective forethought. We face a similar ethical dilemma in our time.

Scene of terminators sent by Skynet (the evil AI) to seek and destroy humans, from the Terminator franchise.

Like opening Pandora’s box, the advent of AI will bring upon us many troubles and blessings alike. We will have to decide how we approach this power. Are humans mature enough to wield infinite intelligence? Probably not. Nevertheless, we are approaching a future where somebody will bring AI into its ultimate fulfillment. It may yet be that there is one thing that can never be programmed, and its absence sheds light on our own failures: wisdom.

Wisdom can never be artificial, as the ancient masters who brought us the game of GO would know.

Conclusion

Artificial Intelligence still has a ways to go, and we really do not know where it will take us. And that is exciting! Teaching AI with games will forever embed a hint of humanity within its cyber DNA. With some restraint and wisdom, and a little GO playing, we will surely see good days ahead. To explore both GO and AI, sign up to play the Game of GO and challenge friends and AI bots alike.

The Game of GO may yet surprise us in ways AI will never understand. Keep playing! (source)